Kameleoon offers two types of dynamic traffic allocation algorithms to help you maximize experiment performance: Multi-Armed Bandits (MAB) and Contextual Bandits. Both approaches use real-time performance data to allocate more traffic to better-performing variations—but differ in how they treat user data. This article explains how these algorithms work, when to use them, and how to activate them in your experiments.

To enable dynamic traffic allocation, simply create an experiment or open an existing one.

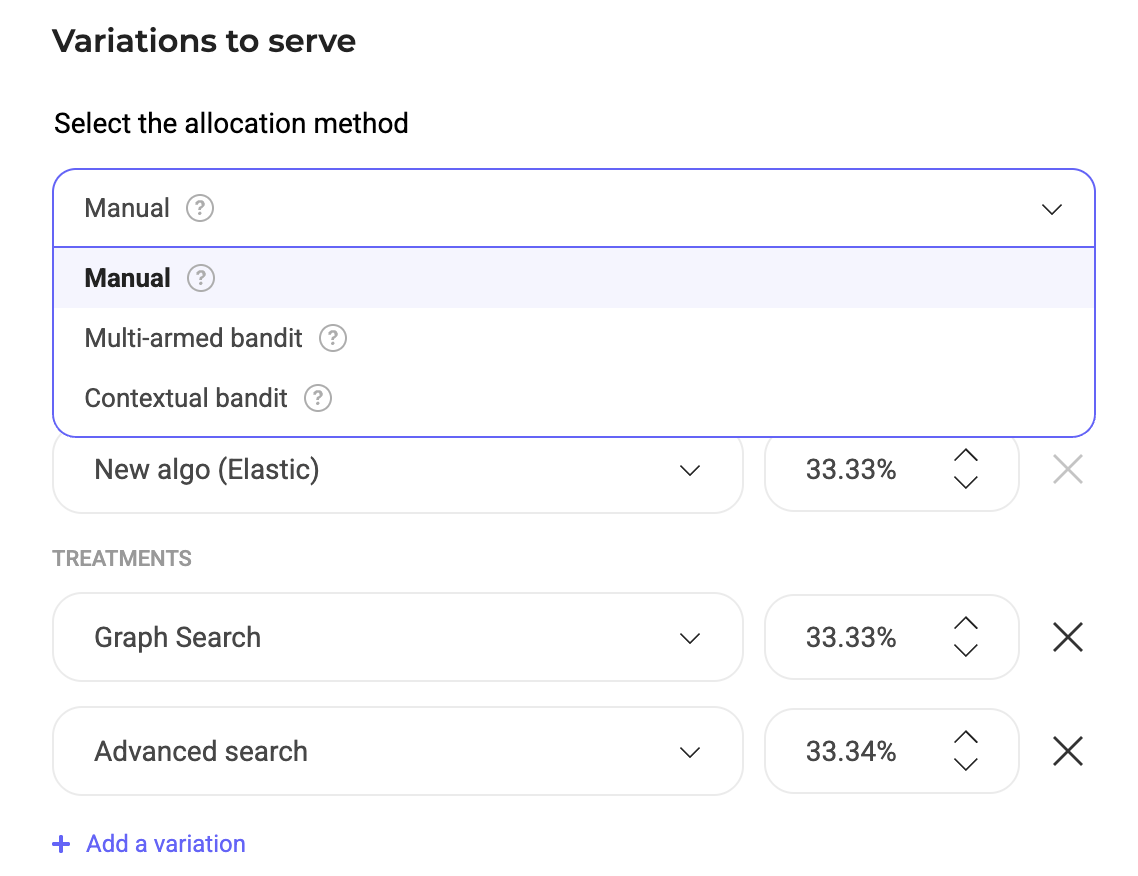

In the Variation to serve section, select your preferred allocation method from the dropdown. There, choose either Multi-Armed Bandit or Contextual Bandit optimization, depending on your needs.

Note: The allocation update is based solely on the lift of the experiment's primary goal.

Multi-Armed Bandit

When using dynamic allocation (MAB), exposure rates cannot be manually edited. Instead, Kameleoon automatically measures each variation's improvement over the original variation and estimates the gain in total conversions using the Epsilon Greedy algorithm. This process repeats hourly, and each time the algorithm reallocates traffic to variations based on their observed performance. As a result, the MAB pushes traffic towards higher-performing variations, even without statistical significance, which can drastically reduce the time it takes to identify your winning or losing variations.
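To make the hourly reallocation more concrete, here is a minimal sketch of a textbook epsilon-greedy allocation step. It is an illustration only, not Kameleoon's actual implementation: the function name `epsilon_greedy_allocation`, the `epsilon` value of 0.1, and the sample figures are all assumptions made for the example.

```python
from typing import Dict, Tuple


def epsilon_greedy_allocation(
    stats: Dict[str, Tuple[int, int]],  # variation -> (visitors, conversions)
    epsilon: float = 0.1,               # hypothetical exploration share
) -> Dict[str, float]:
    """Return the traffic share of each variation for the next period."""
    names = list(stats)
    # Observed conversion rate; variations without traffic count as 0.0.
    rates = {
        name: (conversions / visitors if visitors else 0.0)
        for name, (visitors, conversions) in stats.items()
    }
    best = max(names, key=lambda name: rates[name])
    # Exploration: epsilon is split evenly across all variations.
    shares = {name: epsilon / len(names) for name in names}
    # Exploitation: the remaining traffic goes to the current best performer.
    shares[best] += 1.0 - epsilon
    return shares


# Hypothetical figures observed during the last period.
hourly_stats = {
    "original": (1000, 50),     # 5.0% conversion rate
    "variation_1": (1000, 65),  # 6.5% conversion rate
    "variation_2": (1000, 40),  # 4.0% conversion rate
}
allocation = epsilon_greedy_allocation(hourly_stats)
```

In this sketch, `variation_1` receives most of the traffic for the next period, while the other variations keep a small exploratory share so that their performance can still be re-evaluated at the next update.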

Note: Auto-optimized experiments rely on the original variation (“off” for Feature Experiments) to optimize the deviations. If the original (“off”) variation does not receive any traffic, the deviation may not be updated, so the allocation can remain at 50/50 despite a clear winning variation.
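The dependency on the original variation can be illustrated with a small sketch. It assumes the common definition of relative lift (difference in conversion rate divided by the original's conversion rate); the exact formula Kameleoon uses is not stated in this article, and the function name `lift_over_original` is hypothetical.

```python
from typing import Optional


def lift_over_original(
    original_visitors: int,
    original_conversions: int,
    variation_visitors: int,
    variation_conversions: int,
) -> Optional[float]:
    """Relative lift of a variation's conversion rate over the original's.

    Returns None when the lift cannot be computed, for example when the
    original variation has received no traffic.
    """
    if original_visitors == 0 or variation_visitors == 0:
        return None
    original_rate = original_conversions / original_visitors
    if original_rate == 0:
        return None  # Relative lift is undefined against a 0% baseline.
    variation_rate = variation_conversions / variation_visitors
    return (variation_rate - original_rate) / original_rate


# Hypothetical figures: 5.0% on the original versus 6.5% on the variation.
lift = lift_over_original(1000, 50, 1000, 65)

# With no traffic on the original, there is nothing to compare against.
missing_baseline = lift_over_original(0, 0, 1000, 65)
```

Under these assumptions, `missing_baseline` is `None`: without data on the original there is no lift to act on, which is consistent with the allocation staying where it is.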

Note that MABs do not rely on a control or baseline experience in the way A/B tests do. Instead, MABs focus on improvement over an equal initial allocation and dynamically adjust traffic allocation based on real-time performance. Thus, in cases where statistical analysis is less important and ‘exploration’ time needs to be cut short, MABs can be useful because they are more ‘exploitation’-focused.

Contextual Bandits

Contextual bandits dynamically optimize traffic allocation in experiments using machine learning. They adapt in real time, redistributing traffic based on both variation performance and user context to maximize effectiveness.

Key differences exist between multi-armed bandits and contextual bandits. Understanding these differences is critical for choosing the best method for your experiments:

- Multi-armed bandits optimize traffic distribution among multiple variations (arms) to maximize a defined goal, such as click rates or conversions. They treat all users equally, with no distinction based on user attributes. This makes them ideal for scenarios where user-specific data is unavailable or unnecessary, and the focus is on finding the best-performing variation for the overall audience.

- Contextual bandits incorporate additional user-specific data, like device type, location, or behavior, into decision-making. They allow more personalized decisions, tailoring variations to specific users for improved outcomes. The variability introduced by user attributes allows contextual bandits to optimize decisions in dynamic environments.

So, while multi-armed bandits optimize traffic allocation uniformly across users, contextual bandits leverage contextual data to make more personalized, data-driven decisions.
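The difference between the two approaches can be sketched with simple counting. This is a deliberately simplified illustration, not Kameleoon's machine learning model: it uses a single hypothetical context attribute (device type), made-up figures, and hypothetical helper names (`make_records`, `conversion_rates`, `best_variation`).

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each record is (context, variation, converted). The context here is a
# hypothetical device type; real contextual bandits combine many attributes.
Record = Tuple[str, str, bool]


def make_records(context: str, variation: str, visitors: int, conversions: int) -> List[Record]:
    """Build synthetic records with the given number of conversions."""
    return [(context, variation, i < conversions) for i in range(visitors)]


def conversion_rates(records: List[Record], use_context: bool) -> Dict[str, Dict[str, float]]:
    """Conversion rate per variation, grouped by context or pooled under 'all'."""
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [visitors, conversions]
    for context, variation, converted in records:
        key = context if use_context else "all"
        counts[key][variation][0] += 1
        counts[key][variation][1] += int(converted)
    return {
        key: {v: conv / visitors for v, (visitors, conv) in per_variation.items()}
        for key, per_variation in counts.items()
    }


def best_variation(rates: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """Pick the variation with the highest conversion rate for each group."""
    return {key: max(per_variation, key=per_variation.get) for key, per_variation in rates.items()}


records = (
    make_records("mobile", "A", 100, 10)
    + make_records("mobile", "B", 100, 4)
    + make_records("desktop", "A", 100, 5)
    + make_records("desktop", "B", 100, 8)
)

# Multi-armed bandit view: one winner for the whole audience.
pooled_best = best_variation(conversion_rates(records, use_context=False))

# Contextual view: a winner per context.
contextual_best = best_variation(conversion_rates(records, use_context=True))
```

With these made-up figures, the pooled view favors variation A for everyone, while the contextual view favors A on mobile and B on desktop. That per-context distinction is the kind of information a multi-armed bandit ignores and a contextual bandit can exploit.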

To learn more about how Multi-Armed Bandit optimization works, see our dedicated article, or read our Statistical white paper to dive deeper into the technical details of our MAB algorithm.