Internet bots are software applications that run repetitive, automated tasks over the Internet. Bots can distort your experiment and personalization results: they don’t behave like real visitors, they inflate your site’s traffic volume (visits), and they therefore bias your conversion metrics (KPIs/goals). Removing bot traffic from your campaign results makes your data more accurate.

Kameleoon has two main methods for excluding bot traffic from your campaign results:

- We detect known bots and spiders with the IAB/ABC International Spiders and Bots List and do not count them in our analytics.

- We developed proprietary algorithms to detect bot traffic on websites, and Kameleoon automatically filters this traffic out of your campaign results. When building a visit from visitor events, we exclude the visit from our statistics if we detect it as an outlier (e.g., a bot, a troll, or a tracker bug). A visit is considered an outlier if at least one of the following conditions is met:

    - The visit contains more than 10,000 events.

    - The visit lasts longer than 2 hours.
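The outlier rule above can be sketched as a simple predicate over a visit summary. This is a minimal illustration, not Kameleoon’s implementation: the `Visit` class and the function names are hypothetical, and the sketch assumes that "10K" means 10,000 events and that both thresholds are strict (a visit of exactly 10,000 events or exactly 2 hours is kept).

```python
from dataclasses import dataclass
from datetime import timedelta

# Thresholds from the outlier conditions (assumed strict comparisons).
MAX_EVENTS = 10_000
MAX_DURATION = timedelta(hours=2)


@dataclass
class Visit:
    """Hypothetical visit summary; Kameleoon's internal data model is not documented here."""
    event_count: int
    duration: timedelta


def is_outlier(visit: Visit) -> bool:
    """Return True if at least one outlier condition is met."""
    return visit.event_count > MAX_EVENTS or visit.duration > MAX_DURATION


def filter_visits(visits: list[Visit]) -> list[Visit]:
    """Keep only the visits that would be counted in campaign statistics."""
    return [v for v in visits if not is_outlier(v)]


# Usage: one normal visit, one with too many events, one that lasts too long.
visits = [
    Visit(event_count=120, duration=timedelta(minutes=15)),
    Visit(event_count=12_000, duration=timedelta(minutes=30)),
    Visit(event_count=40, duration=timedelta(hours=3)),
]
print(len(filter_visits(visits)))  # 1
```

Because the conditions are combined with a logical OR, a single exceeded threshold is enough to drop the whole visit.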

Server-side experiments are more vulnerable to bot traffic. When running server-side experiments, you must pass the visitor’s user agent to the SDK so that Kameleoon can filter bots. Refer to your SDK’s documentation for the correct implementation. Client-side SDKs require no additional code, because the Kameleoon SDK automatically includes the user agent in its outbound requests.
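In a server-side integration, the user agent usually comes from the `User-Agent` header of the incoming HTTP request. The following Python sketch assumes a WSGI application (PEP 3333), where that header is exposed as `environ["HTTP_USER_AGENT"]`. `forward_user_agent` and the fixed visitor code are hypothetical placeholders, not Kameleoon SDK APIs; the actual method for passing the user agent differs per SDK.

```python
from typing import Callable, Iterable

# Hypothetical stand-in for your SDK's user-agent method: it only records the
# values so the flow can be inspected. Replace it with the call your SDK documents.
forwarded: list[tuple[str, str]] = []


def forward_user_agent(visitor_code: str, user_agent: str) -> None:
    forwarded.append((visitor_code, user_agent))


def extract_user_agent(environ: dict) -> str:
    """Return the User-Agent header of a WSGI request, or "" if it is absent."""
    # WSGI exposes HTTP request headers as HTTP_* keys in environ.
    return environ.get("HTTP_USER_AGENT", "")


def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Minimal WSGI app that forwards the visitor's user agent before responding."""
    # Hypothetical: derive the visitor code however your integration does.
    visitor_code = "hypothetical-visitor-code"
    forward_user_agent(visitor_code, extract_user_agent(environ))
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"ok"]


# Usage: simulate one request from a crawler with an example user agent.
body = app({"HTTP_USER_AGENT": "Googlebot/2.1"}, lambda status, headers: None)
print(forwarded)  # [('hypothetical-visitor-code', 'Googlebot/2.1')]
```

Forwarding the header on every request, rather than only on suspected bots, leaves the bot decision to Kameleoon’s filtering.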

To activate bot filtering, use the menu on the left to access the Projects page of the Admin menu.

Then go to your project’s Configuration page.

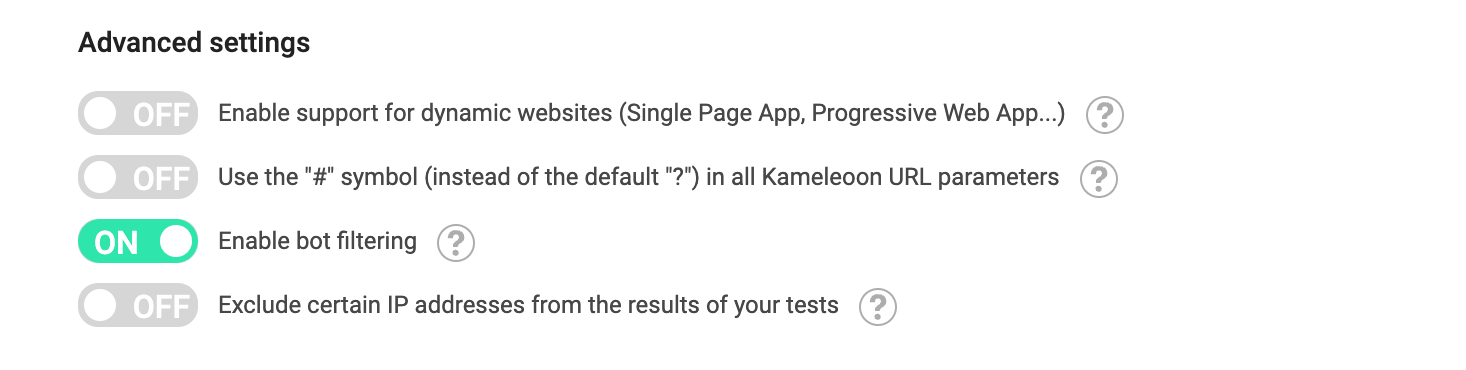

In the Advanced settings, enable bot filtering.